Abstract

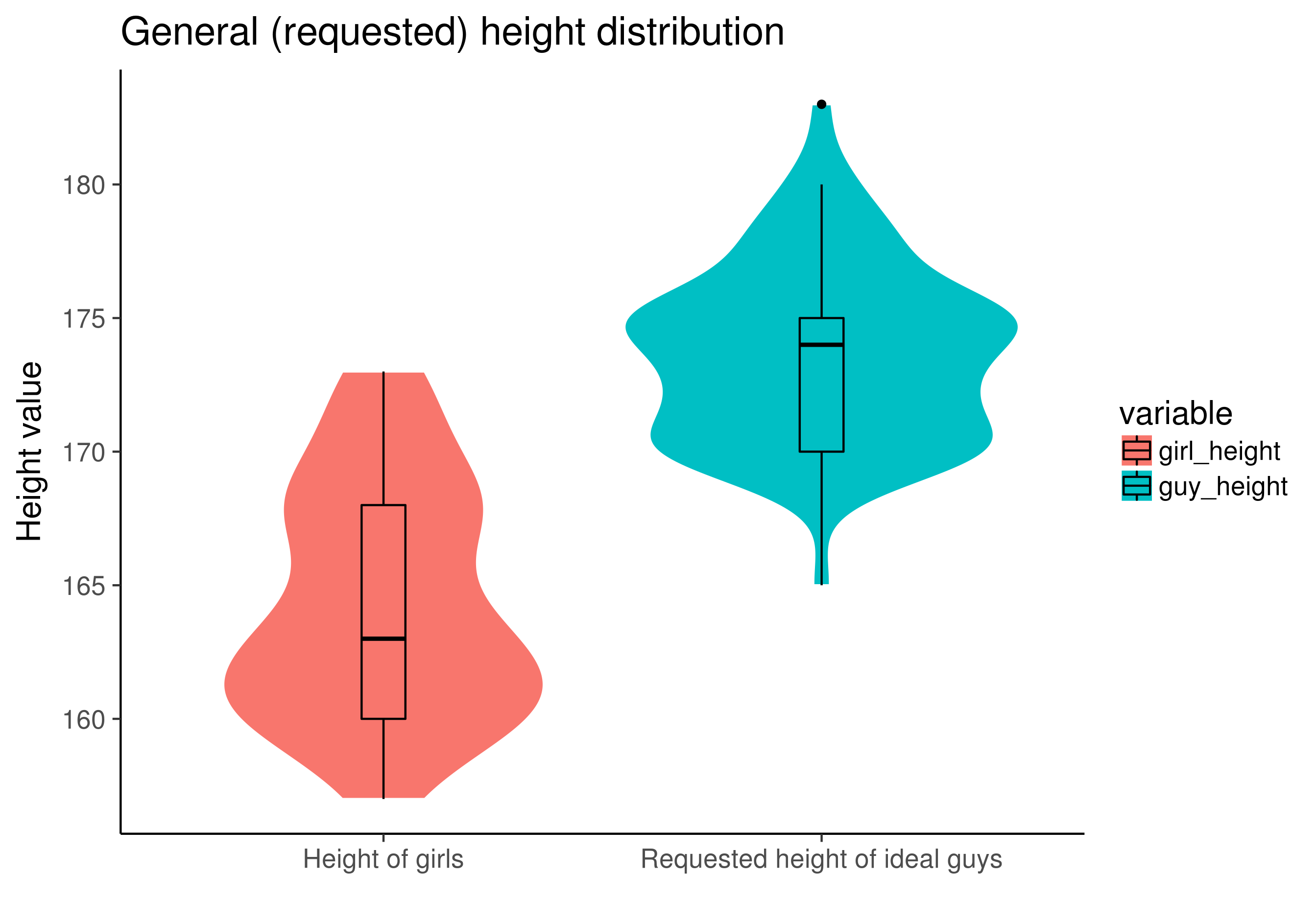

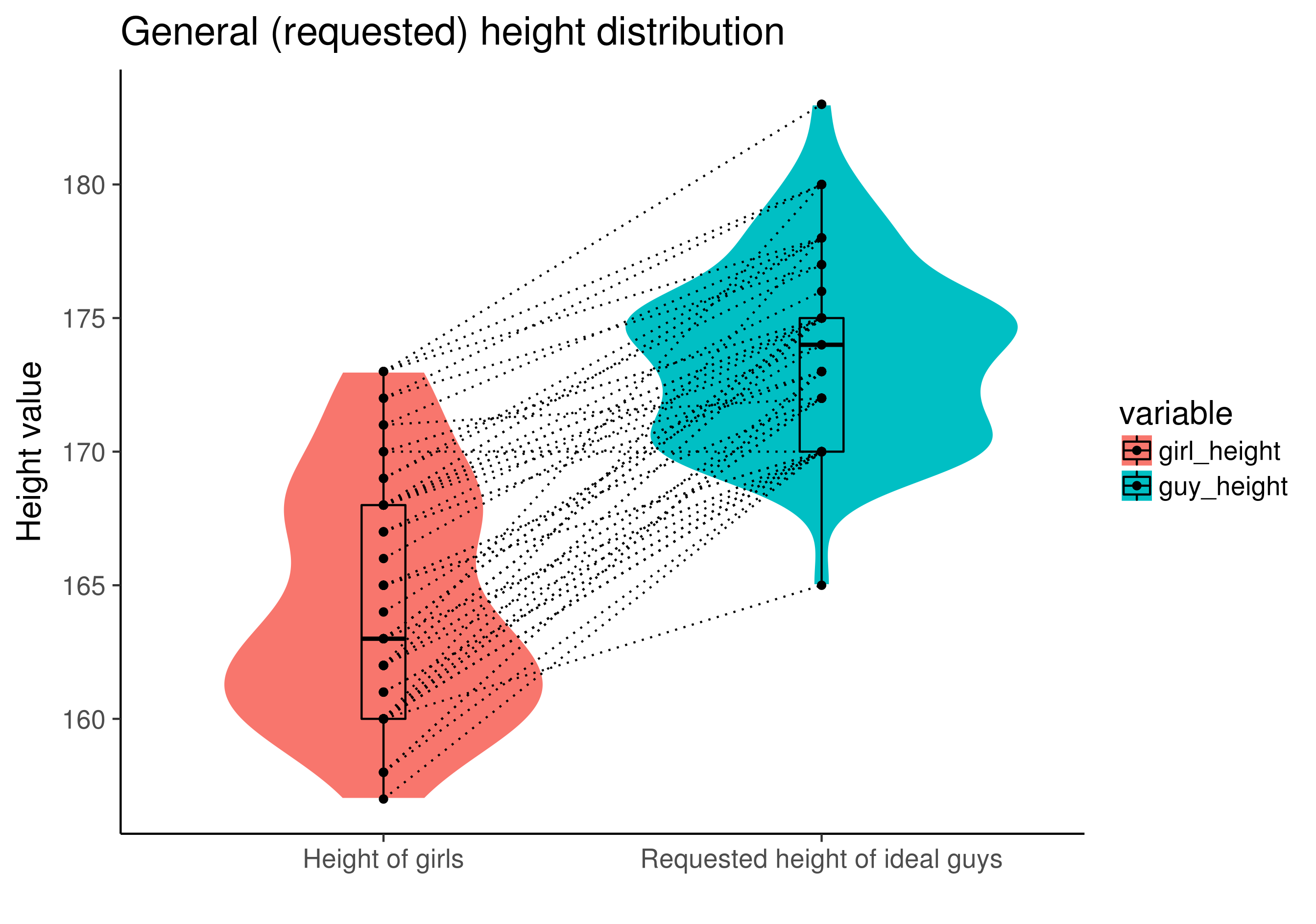

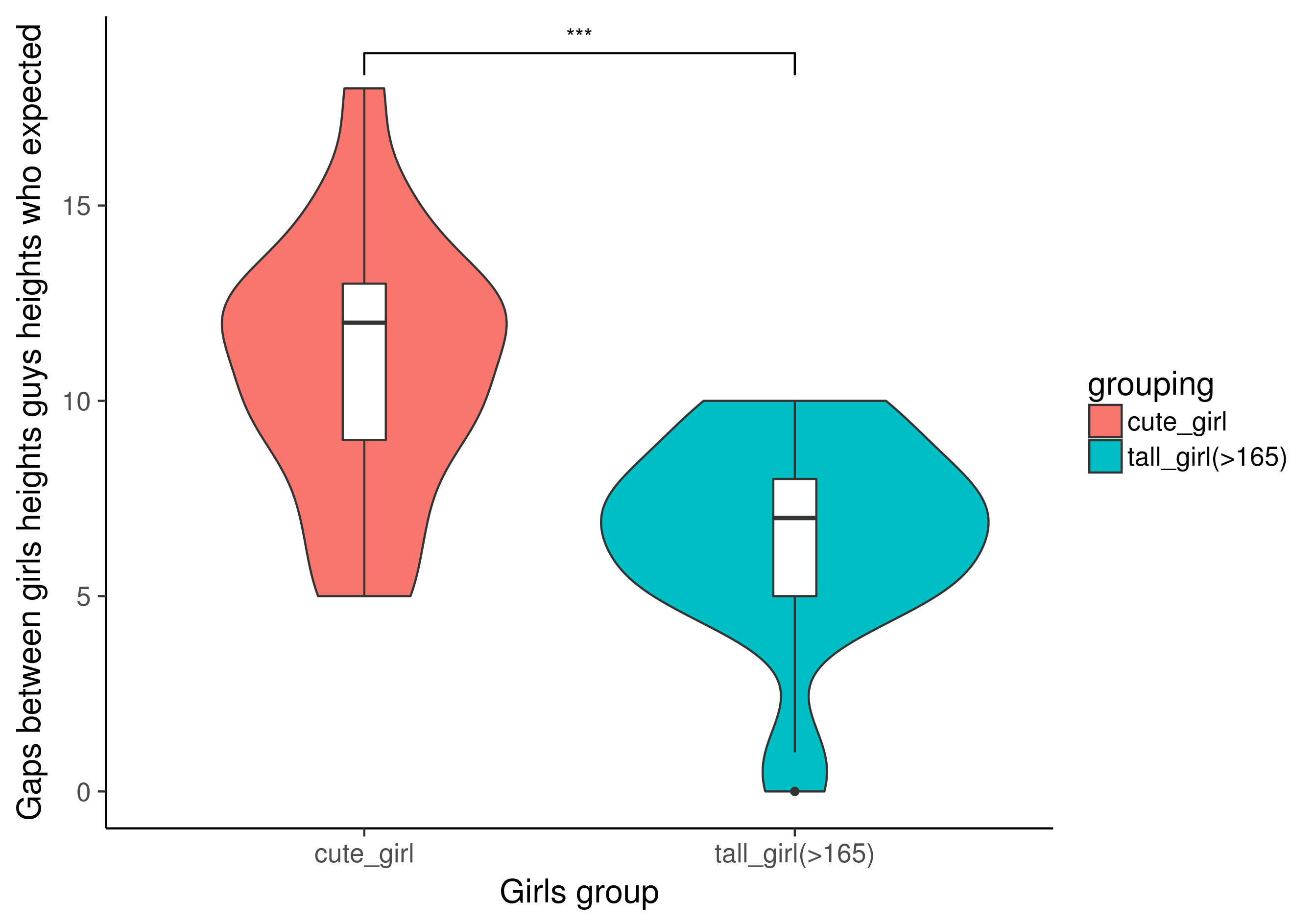

I noticed that on the PKU BBS, most girls seem to prefer tall guys (e.g. > 170 cm), so the short ones (like me… sad face) could easily end up alone? LOL.

Anyway, I cannot draw any conclusion until I have the data.

So, let’s play.

Set up the analysis environment

I planned to use Python 3 tools like scrapy to acquire “clean data” from the website, pandas to organize the data, and jieba for Chinese text segmentation.

Finally, I’d like to use R (tidyverse) to plot :P

Since managing the package dependencies could be too much work, I prefer to start over with a brand-new environment using Anaconda.

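```shell
conda create -n web_data_analyse python=3.6
# switch into the new environment (older conda versions: source activate web_data_analyse)
conda activate web_data_analyse
```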
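A quick way to confirm the new environment is usable before crawling: a minimal sketch that checks the Python version and whether the packages named in this post can be found. It assumes the environment is activated and the packages have been installed with conda or pip; the package list and the helper name `check_environment` are illustrative, not part of the original setup.

```python
import importlib.util
import sys

# Illustrative list (an assumption): import names of the tools mentioned in this post.
REQUIRED = ["scrapy", "pandas", "jieba", "jsonlines"]


def check_environment(required=REQUIRED, min_python=(3, 6)):
    """Return (python_ok, {package: found}) for the current interpreter."""
    python_ok = sys.version_info[:2] >= min_python
    # find_spec() returns None when a top-level module cannot be located
    packages = {name: importlib.util.find_spec(name) is not None for name in required}
    return python_ok, packages


if __name__ == "__main__":
    python_ok, packages = check_environment()
    print("Python", sys.version.split()[0], "OK" if python_ok else "too old")
    for name, found in packages.items():
        print("{:10s} {}".format(name, "found" if found else "MISSING"))
```

Anything reported as MISSING can be installed into the active environment with pip, as done for jsonlines and jieba later on.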

Use a scrapy spider to crawl the website

The scrapy documentation has a tutorial on writing spiders.

After struggling with the documentation for a pretty long time… I’ve got a spider!

spider.py

```python
# -*- encoding: utf-8 -*-
```

The PostItem class is defined in item.py:

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

import scrapy


class PostItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    post_id = scrapy.Field()
    publish_time = scrapy.Field()
    post_url = scrapy.Field()
    content = scrapy.Field()
```

After running the command-line tool

```shell
scrapy crawl bbs_heights -o bbs.jl
```

I had the contents of all the posts I wanted from the past 7 days on the BBS!
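The spider code itself isn't reproduced here, but a "past 7 days" limit boils down to a date cutoff. A minimal sketch of such a check, something a spider could call before yielding an item; the `publish_time` string format and the helper name `is_recent` are assumptions, since the real BBS timestamp format isn't shown.

```python
from datetime import datetime, timedelta

# ASSUMPTION: publish_time looks like "YYYY-MM-DD HH:MM:SS"; the real format may differ.
TIME_FORMAT = "%Y-%m-%d %H:%M:%S"


def is_recent(publish_time, now, days=7):
    """Hypothetical helper: True if publish_time is within `days` days before `now`."""
    published = datetime.strptime(publish_time, TIME_FORMAT)
    return now - timedelta(days=days) <= published <= now


if __name__ == "__main__":
    now = datetime(2018, 5, 20, 12, 0, 0)  # sample "current" time
    print(is_recent("2018-05-15 08:30:00", now))  # True (about 5 days earlier)
    print(is_recent("2018-05-01 08:30:00", now))  # False (19 days earlier)
```

Passing `now` explicitly (instead of calling `datetime.now()` inside) keeps the check deterministic and easy to test.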

Since the data was exported in JSON Lines format, I installed an additional package to read it easily:

```shell
pip install jsonlines
```
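JSON Lines is just one JSON object per line, so the standard library can read bbs.jl as well. A minimal sketch (the helper name `read_jsonlines` is hypothetical); with the jsonlines package, iterating over `jsonlines.open("bbs.jl")` yields the same dicts.

```python
import json


def read_jsonlines(path):
    """Hypothetical helper: yield one dict per non-empty line of a JSON Lines file."""
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if line:  # skip blank lines, e.g. a trailing newline
                yield json.loads(line)
```

Usage: `posts = list(read_jsonlines("bbs.jl"))`. If the next step is pandas anyway, `pd.read_json("bbs.jl", lines=True)` loads the file straight into a DataFrame.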

Parse contents into height-request data

Data cleaning

The data we scraped is well organized, but not yet suitable for downstream analysis.

So I tried a few ways to clean the data and make sense of it.
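One way a basic cleaning pass might look, as a sketch only: it assumes each record is a dict with the PostItem fields, that duplicates share a `post_id`, and that `content` may arrive either as a string or as a list of text fragments (as scrapy selectors often return). The name `clean_posts` is hypothetical.

```python
import re


def clean_posts(posts):
    """Hypothetical helper: drop duplicate post_ids and collapse whitespace in content."""
    seen = set()
    cleaned = []
    for post in posts:
        if post["post_id"] in seen:
            continue  # the same post may have been scraped more than once
        seen.add(post["post_id"])
        content = post.get("content") or ""
        # ASSUMPTION: content may be a list of text fragments; join them first
        if isinstance(content, list):
            content = " ".join(content)
        cleaned.append(dict(post, content=re.sub(r"\s+", " ", content).strip()))
    return cleaned
```

Keeping the first occurrence of each `post_id` preserves crawl order, which matters if the posts are later counted by date.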

Parse contents into words

Install jieba for Chinese text segmentation:

```shell
pip install jieba
```

After removing the stopwords, the remaining words looked cleaner. However, I was hoping that text segmentation would help me extract some key information about the context of each height. The fact is, it couldn’t. Or maybe I just haven’t got the hang of analysing linguistic data.
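Stopword removal itself is a simple filter over the segmented tokens. A minimal sketch, assuming a stopword file with one word per line (the file and both helper names are hypothetical); the token list is made up for illustration so the snippet runs without jieba, where in practice it would come from `jieba.lcut(text)`.

```python
def load_stopwords(path):
    """Hypothetical helper: read a stopword list with one word per line."""
    with open(path, encoding="utf-8") as f:
        return {line.strip() for line in f if line.strip()}


def remove_stopwords(tokens, stopwords):
    """Drop stopwords and blank (whitespace-only) tokens from a segmented sentence."""
    return [t for t in tokens if t.strip() and t not in stopwords]


if __name__ == "__main__":
    # Made-up tokens standing in for jieba.lcut(...) output
    tokens = ["我", "希望", "男生", "身高", " ", "175", "以上", "的"]
    print(remove_stopwords(tokens, {"我", "的", "以上"}))  # ['希望', '男生', '身高', '175']
```

Note that a generic stopword list may drop words like 以上 ("or above"), which are exactly the context words a height request depends on.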

Extract height data

Use re, of course.

However, it was trickier than I expected, because some of the heights are written in pretty strange and funny ways, like 165cm60kg or 一七六 (176 spelled out in Chinese numerals). I chose to ignore heights written in Chinese characters and focus on extracting several funny forms of numeric heights.

Well… in the end I came up with a solution. It’s not elegant, I have to admit, but it works (for now).

The function is shown below.

```python
# value words detection
```

You can see some “trying efforts” in it…
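A minimal sketch of the same idea (a rewrite for illustration, not the function above): regular expressions for plain centimetre values and for values in metres, with lookarounds so digits inside longer numbers (years, phone numbers) are ignored. The 140–209 cm range, the metre forms, and the name `extract_heights` are assumptions.

```python
import re

# Three-digit heights in an assumed plausible range (140-209 cm) that are not part of
# a longer number such as a year or phone number. A unit like "cm" may follow but is
# not required, so "165cm60kg" yields 165 while the two-digit "60" is ignored.
CM_PATTERN = re.compile(r"(?<!\d)(1[4-9]\d|20\d)(?!\d)")
# Heights written in metres, e.g. "1.76m", "1.8M" or "1.76米" (an assumed extra form).
M_PATTERN = re.compile(r"(?<![\d.])1\.(\d{1,2})\s*(?:m|M|米)")


def extract_heights(text):
    """Hypothetical helper: numeric heights (cm) mentioned in text, in order of appearance."""
    found = [(m.start(), int(m.group(1))) for m in CM_PATTERN.finditer(text)]
    for m in M_PATTERN.finditer(text):
        # "8" in "1.8m" means 80 cm over one metre, so pad to two digits
        found.append((m.start(), 100 + int(m.group(1).ljust(2, "0"))))
    return [height for _, height in sorted(found)]
```

For example, `extract_heights("165cm60kg")` returns `[165]` and `extract_heights("希望1.8m以上")` returns `[180]`; heights in Chinese numerals such as 一七六 are skipped, matching the choice above.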

Plots & Conclusions

Well, let’s cut to the chase.

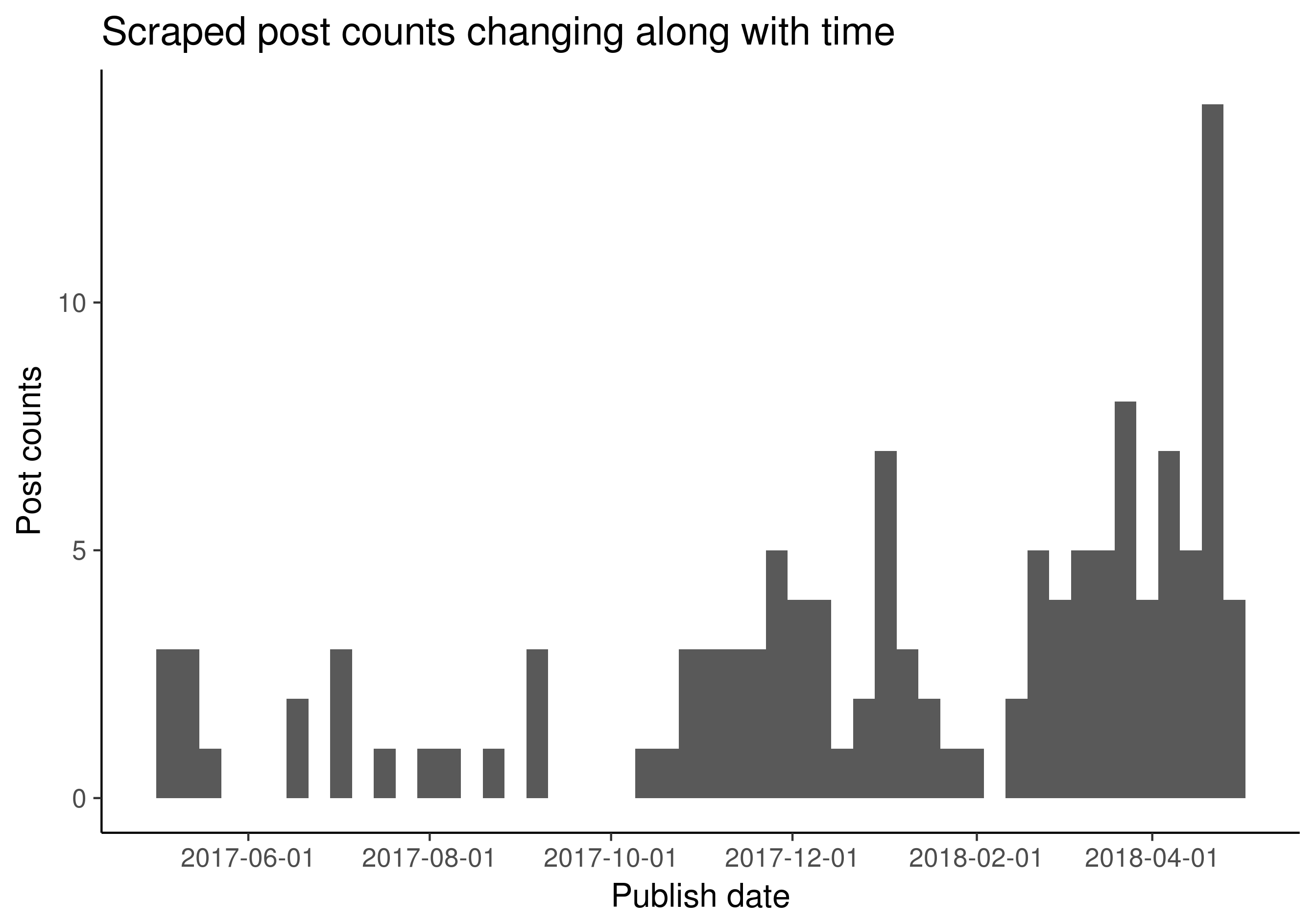

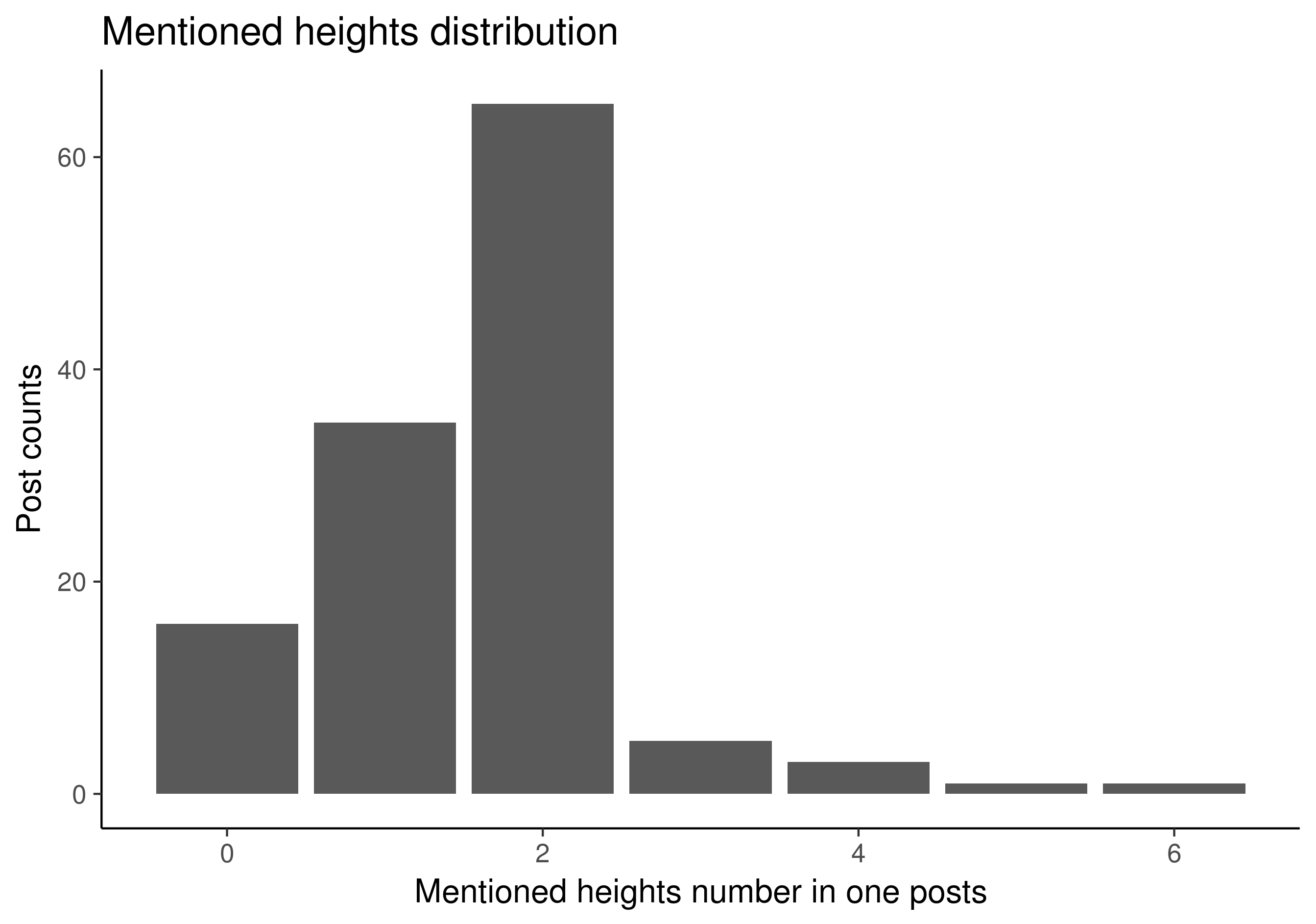

I plotted the post counts by publish date and the distribution of the mentioned heights to get a general idea of the data set.
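The plots themselves were made in R, but the tallies behind them are simple. A hedged Python sketch of the two summaries, assuming `publish_time` starts with a `YYYY-MM-DD` date and the heights are integers in cm; the helper names and the 5 cm bin width are illustrative choices.

```python
from collections import Counter


def posts_per_day(posts):
    """Count posts by date, assuming publish_time starts with 'YYYY-MM-DD'."""
    return Counter(post["publish_time"][:10] for post in posts)


def height_histogram(heights, bin_width=5):
    """Bucket heights (cm) into bins of bin_width, keyed by each bin's lower edge."""
    return Counter((h // bin_width) * bin_width for h in heights)
```

`sorted(posts_per_day(posts).items())` gives a date-ordered table that can be written to CSV and handed to ggplot2 (or plotted directly with matplotlib).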

As for the key point, whether girls prefer guys who are over 170 cm, I think it is shown pretty clearly in fig3 & fig4.
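To put a single number on that question, one could compute the share of extracted heights at or above the threshold. A minimal sketch, assuming `heights` holds the heights requested in the posts (in cm); the name `share_at_or_above` is hypothetical. Using `>=` counts "170以上" (170 or above) as meeting the bar; switch to `>` for a strict reading of "over 170".

```python
def share_at_or_above(heights, threshold=170):
    """Fraction of heights >= threshold (cm); None when there are no heights."""
    if not heights:
        return None
    return sum(1 for h in heights if h >= threshold) / len(heights)
```

For example, `share_at_or_above([165, 170, 175, 180])` returns `0.75`.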

The basic analysis is written up in my post on PKU BBS.

I am NOT going to repeat it here, too much trouble…

Copyright notice: unless otherwise stated, all articles on this blog are licensed under CC BY-NC-SA 3.0 CN. Please credit the source when reposting!